MADSys

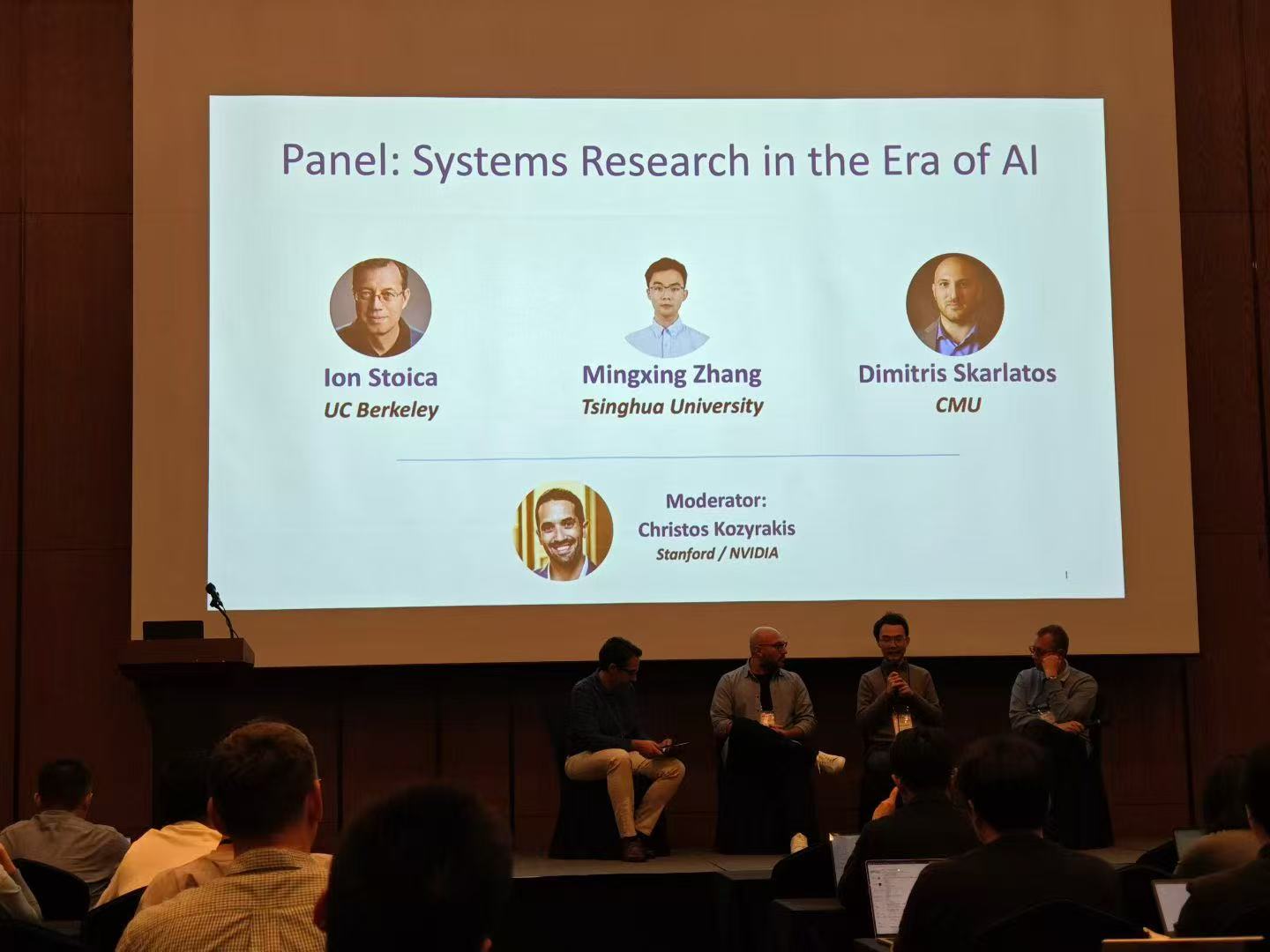

The MADSys (Machine Learning, AI, Big Data Systems) group is dedicated to the design, implementation, evaluation, and application of parallel and distributed systems. Our research spans various methods aimed at accelerating data processing. Although the foundational principles like caching, batching, and overlapping are consistent, the strategic and innovative application of these techniques allows system researchers to optimally utilize diverse hardware resources across different scenarios.

Recent News

Award

Sep 12, 2025 - Our “Trading storage for computation” technology solution received Huawei’s 2024 Olympus Award! Congratulations!

Jul 28, 2025 - Our joint project with Haizhi, “Joint Application Platform for Graph Models Based on Large-scale Graph Data Analysis Technology” has won the 2nd “Zu Chongzhi Award” - Frontiers of Artificial Intelligence Innovation Award. Congratulations!

Apr 22, 2025 - Congratulations to Xun Sun and Yutong Lin for winning the second prize in the 35th Student Laboratory Construction Contribution Award at Tsinghua University for their cloud platform.

Feb 25, 2025 - Our paper "MOONCAKE: Trading More Storage for Less Computation —— A KVCache-centric Architecture for Serving LLM Chatbot" gets 🏆FAST 2025 Best Paper!

Feb 19, 2025 - Our opensource project “KTransformers” has earned 10k Stars⭐ at Github! Congratulations!

Sep 15, 2023 - Wang Leping and his team participated in the “Changchengbei” cyber security competition and won the first place. Congratulations!

Publications

Nov 25, 2025 - Our paper “From Prefix Cache to Fusion RAG Cache: Accelerating LLM Inference in Retrieval-Augmented” is accepted by SIGMOD 26 (The 2026 ACM Special Interest Group on Management of Data)! Congratulations!

Oct 9, 2025 - Our paper “A Declarative Slice Spraying Engine for Performant and Resilient Data Movement in Disaggregated LLM Serving” is accepted by FAISys 2025 (The 1st Frontier AI Systems Workshop)! Congratulations!

Aug 24, 2025 - Our paper “Accelerating Stream Processing Engines via Hardware Offloading” is accepted by SIGMOD 2026!

Jul 15, 2025 - Our paper “KTransformers: Unleashing the Full Potential of CPU/GPU Hybrid Inference for MoE Models” is accepted by SOSP 2025. Congratulations!

Mar 26, 2025 - Our paper “Scalio: Scaling up DPU-based JBOF Key-value Store with NVMe-oF Target Offload” is accepted by OSDI 2025!

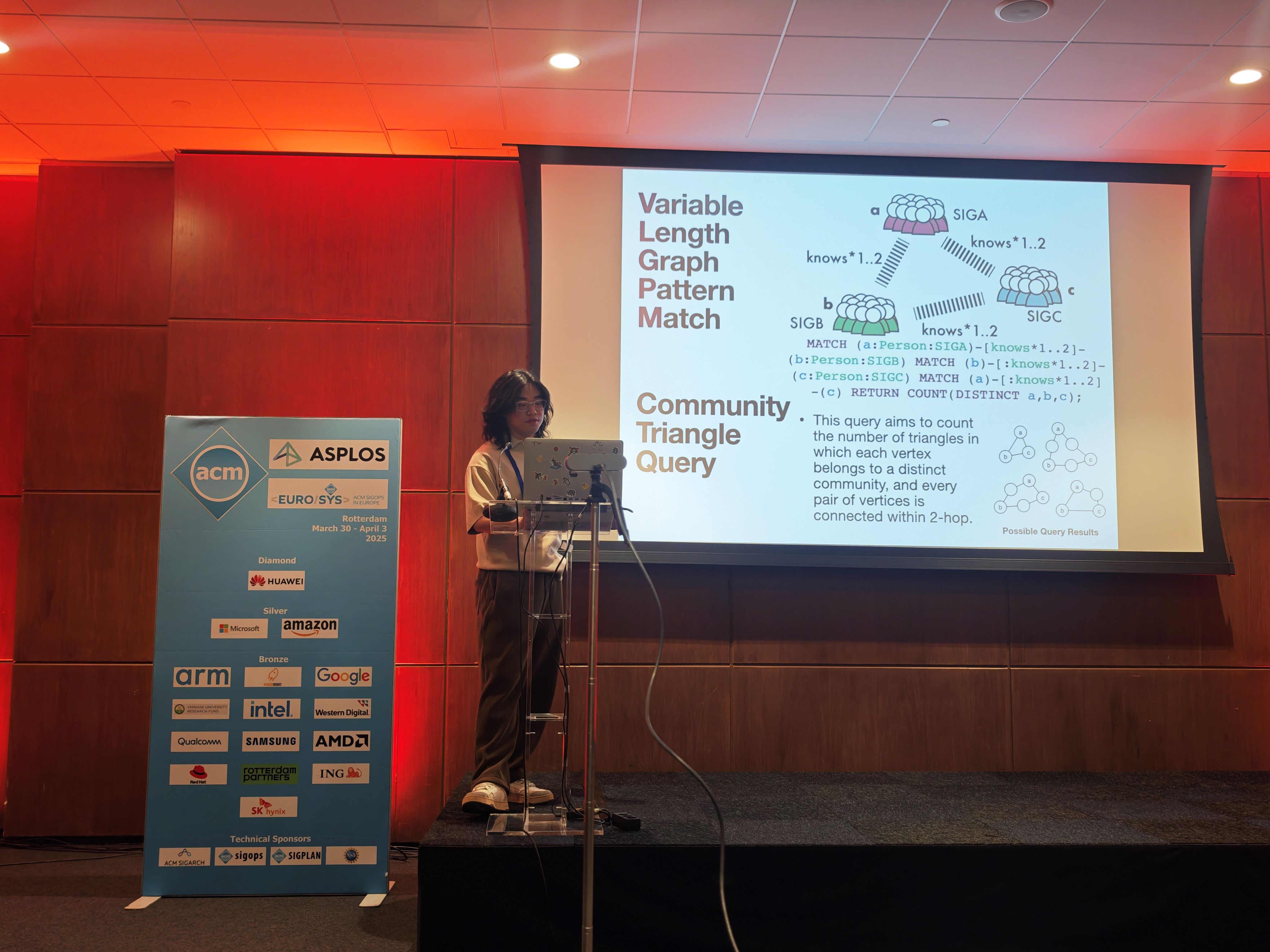

Feb 25, 2025 - Our paper “Scaling Asynchronous Graph Query Processing via Partitioned Stateful Traversal Machines” is accepted by ICDE 2025!

Feb 25, 2025 - Our paper “OOCC: One-round Optimistic Concurrency Control for Read-Only Disaggregated Transactions” is accepted by ICDE 2025!

People

Jan 11, 2026 - The MADSys Laboratory hosted a New Year celebration dinner.

Sep 1, 2025 - Jingqi Tang, Chengyu Qiu, Yuening Zhu, Ruili Liu and Qingliang Ou are welcome to join the MadSys Group.

Jun 15, 2025 - The MADSys Laboratory held the 2024-2025 annual meeting, inviting alumni, graduates and all the faculty and students of the lab!

Sep 1, 2024 - Research Assistant Professor Shan Yingdi joins MADSys Group, Welcome!

Sep 1, 2024 - Sixing Lin, Ruoyu Qin, Boxin Zhang, Jianfeng Li, Jingbo Shan, Jianwei Dong, Chen Lin and Yuanyong Chen are welcome to join the MadSys Group.

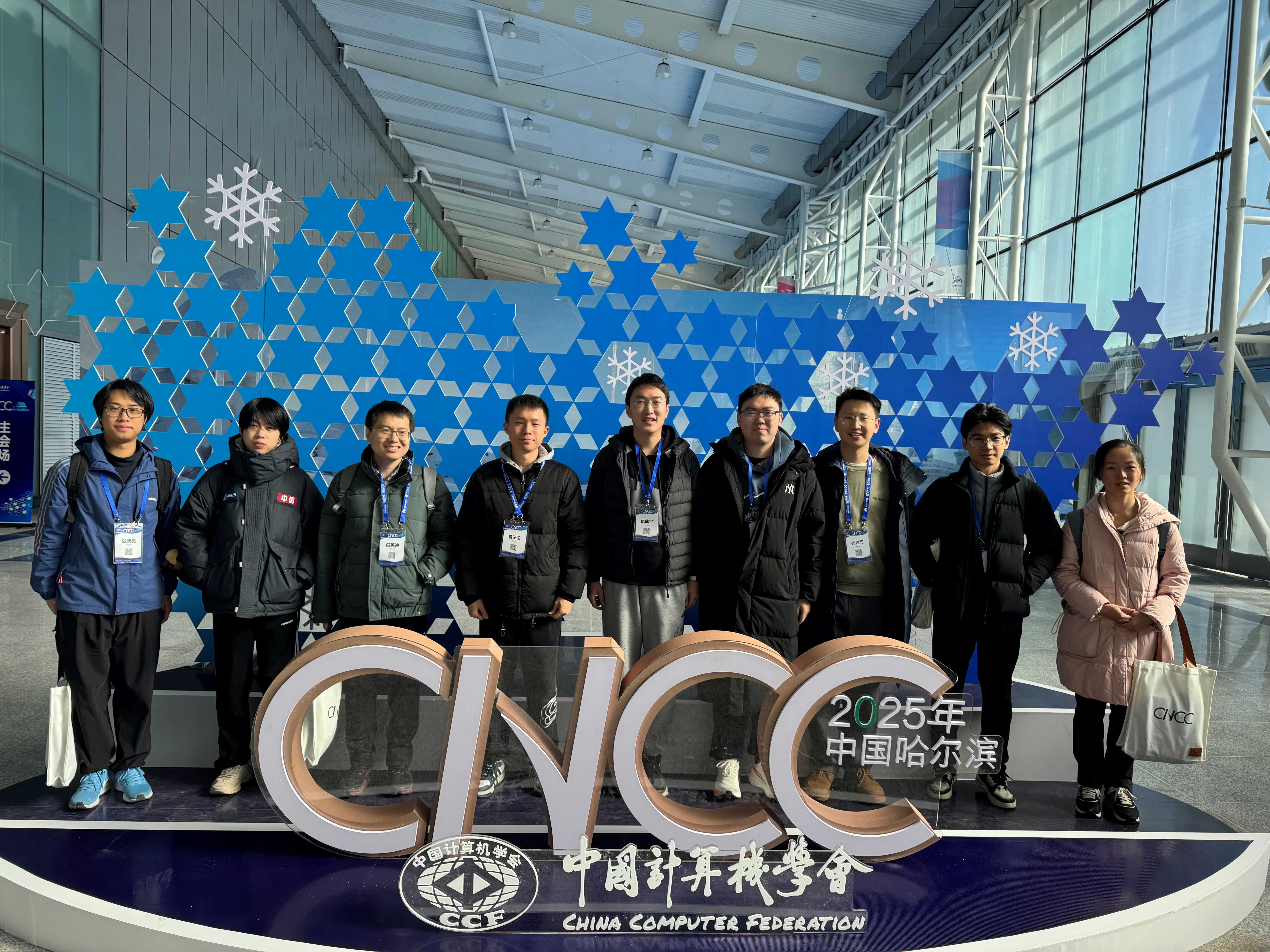

Dec 20, 2023 - Professor Wu Yongwei has been elevated to CCF Fellow. Congratulations!

Sep 1, 2023 - Jinqi Hua, Xun Sun, Shaofeng Ding and Ziyu Zeng are welcome to join the MadSys Group.

Projects

Essentially, a decoder-only Transformer model transforms data from any modality into KVCache, positioning it as a central element in LLM serving optimizations. These optimizations include, but are not limited to, caching, scheduling, compression, and offloading. KVCache.AI is a collaborative endeavor with leading industry partners such as Approaching.AI and Moonshot AI. The project focuses on developing effective and practical techniques that enrich both academic research and open-source development.

Mooncake is the serving platform for Kimi, a leading LLM service provided by MadSys, Moonshot AI et.al.

A Flexible Framework for Experiencing Cutting-edge LLM Inference Optimizations.

Prompted by advancements in modern interconnect technologies like RDMA and CXL, this project aims to revisit the implementation and application of distributed shared memory (DSM). The objective is to facilitate the development of resilient distributed applications that can tolerate partial failures, making this process as straightforward and efficient as programming concurrent applications on a single machine. RDSM is a collaborative endeavor with leading industry partners such as Alibaba and Intel, dedicated to establishing fundamental frameworks that enhance both academic research and open-source development.